Cluster computing is a computational model designed to solve problems using a large number of computers organized in a telecommunications network.

Cluster computing refers to a computational model, and also to the use of distributed systems and their associated technologies to solve computational problems. In cluster computing, each problem is divided into many tasks, each of which is solved by one or more computers that communicate by exchanging messages. Next we are going to explain the fundamentals of cluster computing and analyze its important applications, such as the Internet and big data.

Cluster Computing Model

A computational model describes how a system calculates and generates the result of a mathematical function after receiving some input data. In the case of the cluster computing model, these operations are performed by a group of machines that collaborate. The computers in the group share resources in such a way that the user sees the group as a single computer, more powerful than any of the machines that compose it. Such a group of computers is called a cluster, and each of the machines that make it up is called a node.
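The idea of dividing a problem into tasks and combining the partial results can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`split_into_tasks`, `node_work`, `cluster_sum_of_squares`) are made up for this example, and threads stand in for nodes, whereas a real cluster would send each chunk to a separate machine over the network.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tasks(numbers, task_count):
    """Divide the input into roughly equal chunks, one per task."""
    chunk_size = -(-len(numbers) // task_count)  # ceiling division
    return [numbers[i:i + chunk_size] for i in range(0, len(numbers), chunk_size)]


def node_work(chunk):
    """The work a single node performs on its share of the problem."""
    return sum(x * x for x in chunk)


def cluster_sum_of_squares(numbers, node_count=4):
    """Present the group of workers to the caller as if it were one machine."""
    if not numbers:
        return 0
    tasks = split_into_tasks(numbers, node_count)
    # Assumption: threads simulate the nodes of the cluster.
    with ThreadPoolExecutor(max_workers=node_count) as pool:
        partial_results = list(pool.map(node_work, tasks))
    # Combine the partial results into the final answer.
    return sum(partial_results)
```

The caller only sees `cluster_sum_of_squares`, in the same way that the user of a cluster sees a single computer rather than the individual nodes.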

So, if I connect a few computers, do I have a cluster?

For a cluster to function as such, it is not enough to connect the computers together; a cluster operating system must also be provided. This cluster control or management system is responsible for interacting with the user and with the processes that run on the cluster in order to optimize its operation.

Unlike in grid computing, every node in a computer cluster is set to perform the same task, controlled and scheduled by software.


Advantages and Disadvantages of Cluster Computing

This way of solving problems is much cheaper than building a single machine powerful enough to solve the problem on its own. In addition, clusters provide a great improvement in performance or availability compared to a single machine. As they say, unity is strength.

On the other hand, among the disadvantages, these systems are more complex and require more knowledge to configure, maintain and work with.

Characteristics of Clustered Systems

Clusters are usually employed to improve performance or availability beyond what a single machine provides, and they are typically more economical than a single computer of comparable speed or availability. Because clusters are flexible, they are also easier and cheaper to build.

A cluster is expected to present combinations of the following services:

- High performance

- High availability

- Load balancing

- Scalability
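Of the services listed above, load balancing is the easiest to illustrate in code. The sketch below assumes a simple "least-loaded node first" policy, which is one possible strategy and not necessarily what any particular cluster manager does; the function name `balance_load` and the node names are hypothetical.

```python
import heapq


def balance_load(task_costs, node_names):
    """Assign each task to the node with the least work so far."""
    # Heap of (accumulated cost, node name); the lightest node is popped first.
    heap = [(0, name) for name in node_names]
    heapq.heapify(heap)
    assignment = {name: [] for name in node_names}
    # Placing the largest tasks first usually gives a more even split.
    for cost in sorted(task_costs, reverse=True):
        load, name = heapq.heappop(heap)
        assignment[name].append(cost)
        heapq.heappush(heap, (load + cost, name))
    return assignment


if __name__ == "__main__":
    plan = balance_load([5, 3, 8, 2, 7, 4], ["node-a", "node-b", "node-c"])
    for node, costs in plan.items():
        print(node, costs, "total:", sum(costs))
```

Adding another name to `node_names` spreads the same work over more nodes, which is also the basic idea behind scalability.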

Clustered system

A clustered system is a system whose components are located in different interconnected computers, and which communicate and coordinate their actions by exchanging messages between them. Components interact to achieve a common goal.

There are many ways to implement the message exchange mechanism, such as HTTP, RPC, or message queues.
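As a rough illustration of components that coordinate only by exchanging messages, the following sketch uses in-memory queues from the Python standard library. It is a simulation under stated assumptions: the two queues stand in for a network channel, a thread stands in for a remote node, and the message fields (`type`, `id`, `payload`, `value`) are invented for this example.

```python
import queue
import threading


def worker_node(inbox, outbox):
    """Read request messages until a stop message arrives, replying to each."""
    while True:
        message = inbox.get()
        if message["type"] == "stop":
            break
        # The only thing the nodes share is the messages they exchange.
        outbox.put({"type": "result", "id": message["id"],
                    "value": message["payload"].upper()})


def run_exchange(payloads):
    """Send one request per payload and collect the replies by request id."""
    to_worker, from_worker = queue.Queue(), queue.Queue()
    node = threading.Thread(target=worker_node, args=(to_worker, from_worker))
    node.start()
    for request_id, payload in enumerate(payloads):
        to_worker.put({"type": "request", "id": request_id, "payload": payload})
    to_worker.put({"type": "stop"})
    node.join()  # after this, every reply is already in from_worker
    replies = {}
    while not from_worker.empty():
        reply = from_worker.get()
        replies[reply["id"]] = reply["value"]
    return replies
```

In a real system the queues would be replaced by one of the mechanisms mentioned above, but the request and reply pattern stays the same.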

Cluster Computing Programs and Algorithms

A clustered program is a computer program that runs a distributed algorithm on a clustered system, where a distributed algorithm is an algorithm designed to run on a distributed system. The most common problems solved by distributed algorithms are leader election, consensus, distributed search, spanning tree generation, mutual exclusion, and resource allocation. Distributed algorithms are a subtype of parallel algorithms, which typically run concurrently. In distributed algorithms, the parts that make up the process are separate and run simultaneously on separate processors, each having limited information about what the other parts are doing.
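To make leader election more concrete, here is a small single-process simulation of a ring election in the style of the Chang-Roberts algorithm. This algorithm was chosen for the example and is not named in the text above. The sketch assumes unique node ids and a one-directional ring in which each node knows only its own id and the neighbour it sends to; the function name `ring_leader_election` is hypothetical.

```python
from collections import deque


def ring_leader_election(node_ids):
    """Simulate a Chang-Roberts style election; node ids must be unique.

    Returns the elected leader id and the number of messages sent.
    """
    n = len(node_ids)
    # Every node starts by sending its own id to its clockwise neighbour.
    in_flight = deque(((i + 1) % n, node_id) for i, node_id in enumerate(node_ids))
    messages_sent = n
    while in_flight:
        position, candidate = in_flight.popleft()
        own_id = node_ids[position]
        if candidate == own_id:
            # The id travelled the whole ring without being beaten.
            return own_id, messages_sent
        if candidate > own_id:
            # Forward larger ids; smaller ones are silently dropped.
            in_flight.append(((position + 1) % n, candidate))
            messages_sent += 1
    raise ValueError("node_ids must not be empty")
```

Each node decides what to do using only the message it has just received and its own id, which reflects the limited information that every part of a distributed algorithm has about the others.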

Types of cluster computing architectures

In cluster computing we can differentiate two types of architectures based on the centrality of the network as a classification criterion: centralized and decentralized. This classification will influence the decision-making model of the cluster. Although each tool has its own architecture with its peculiarities, we are going to use this differentiation to classify them.

Next, we are going to discuss two of the most common architecture models in Big Data: the master-slave architecture and the peer-to-peer (P2P) architecture.

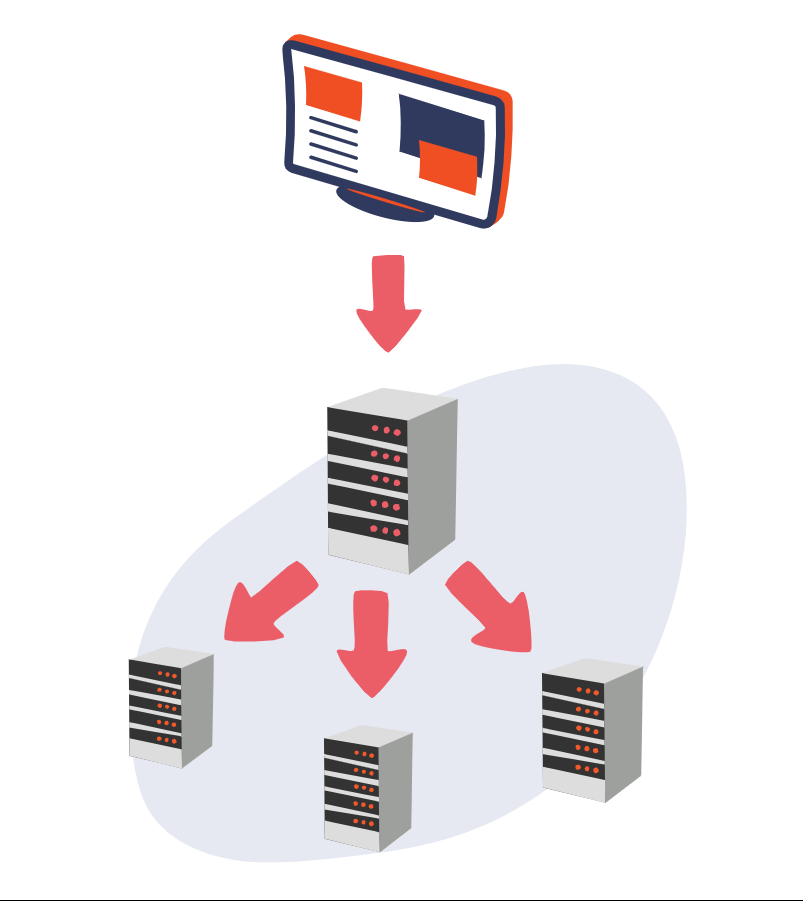

Master-slave architecture

It is a centralized distributed architecture model with a hierarchical organization between the machines, which distinguishes two classes of nodes in the network: masters and slaves. We call master nodes, or masters, the machines that coordinate the work of other machines; and slaves, workers or executors the machines that perform the work the master node assigns them. The master node serves as the communication hub between the worker nodes and the system requesting the result, be it a user or another process.

Among the advantages of this model we find scalability, since we can increase the capacity of the system by adding more worker nodes to the network, and fault tolerance, since if one of the worker nodes fails, the system can recover by distributing the work of the affected node among the rest of the workers until it becomes available again. In addition, decision making is greatly simplified, as the master node is the center of all communications and orders.
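A minimal sketch of this behaviour, assuming a master that hands out tasks in round-robin order and reroutes the tasks of unavailable workers, could look like the following. The function `run_master`, the `failed_workers` parameter and the worker names used below are hypothetical and only serve the illustration.

```python
def run_master(tasks, workers, failed_workers=frozenset()):
    """Assign tasks round-robin, rerouting work away from failed workers.

    `workers` maps a worker name to the function that worker runs.
    Returns a list of (task, worker name, result) tuples.
    """
    names = list(workers)
    healthy = [name for name in names if name not in failed_workers]
    if not healthy:
        raise RuntimeError("no worker nodes available")
    results = []
    for index, task in enumerate(tasks):
        chosen = names[index % len(names)]
        if chosen in failed_workers:
            # Fault tolerance: hand the task to a healthy worker instead.
            chosen = healthy[index % len(healthy)]
        # All communication goes through the master, which simplifies decisions.
        results.append((task, chosen, workers[chosen](task)))
    return results


if __name__ == "__main__":
    def double(x):
        return x * 2

    pool = {"worker-1": double, "worker-2": double, "worker-3": double}
    for row in run_master([1, 2, 3, 4, 5, 6], pool, failed_workers={"worker-2"}):
        print(row)
```

Scaling out means adding another entry to `workers`; the master's logic does not change.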

However, this centralized model also has drawbacks, and they lie in the master node: it is both the single point of contact (SPOC) and the single point of failure (SPOF). If the master node fails, we lose the entire system. For this reason, this architecture is commonly backed by a multi-master mechanism, in which standby master nodes are ready to replace the active one if it fails; this gives the model availability.
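The multi-master idea can be sketched as a list of masters in which the first live entry is the active one. This is a deliberately simplified model: the class `MasterGroup` and its method names are assumptions made for this example, and real systems also need a way to detect the failure and to agree on who takes over.

```python
class MasterGroup:
    """A primary master plus standbys that can take over if it fails."""

    def __init__(self, master_names):
        if not master_names:
            raise ValueError("at least one master is required")
        self.alive = list(master_names)  # the first entry is the active master

    @property
    def active(self):
        """Name of the master currently coordinating the workers."""
        return self.alive[0]

    def report_failure(self, name):
        """Remove a failed master; the next standby becomes active."""
        if name in self.alive:
            self.alive.remove(name)
        if not self.alive:
            # With no master left, the single point of failure has been hit.
            raise RuntimeError("all masters failed: the whole system is down")


if __name__ == "__main__":
    group = MasterGroup(["master-1", "master-2"])
    print(group.active)
    group.report_failure("master-1")
    print(group.active)
```

With a single entry in the list, the first failure takes the whole system down, which is the single point of failure described above.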

This architecture is widely used, and among its many applications it stands out as the basis of the client-server model, which is fundamental to the development of the Internet.

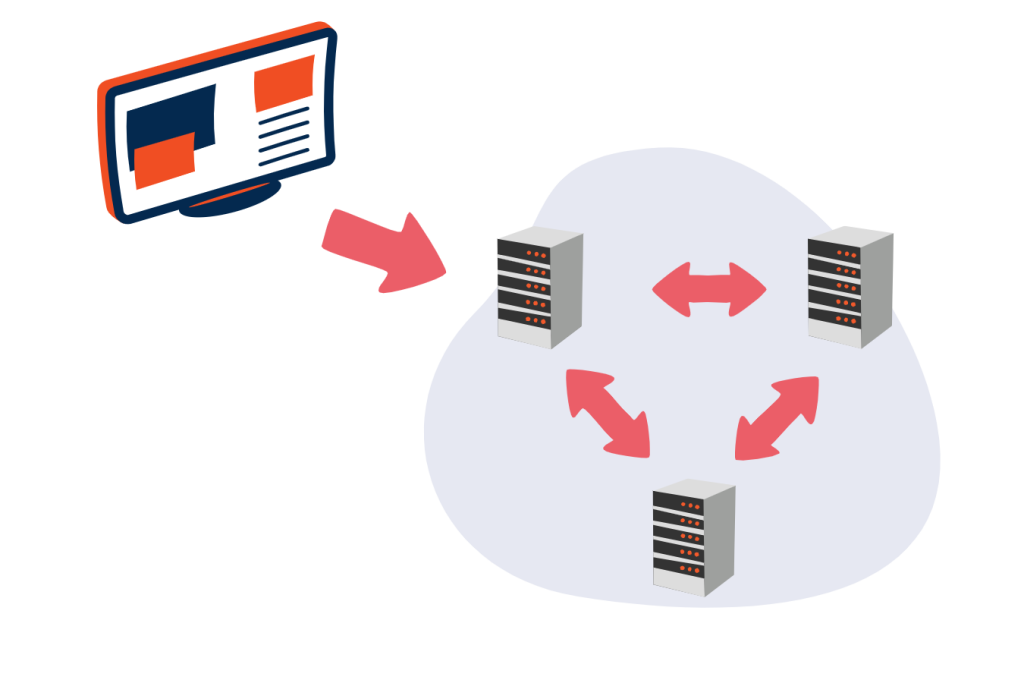

Peer-to-peer Architecture

This architecture is commonly abbreviated as P2P. It is a decentralized architecture model in which there are no differences between the network nodes: they are all treated as equals. There are no nodes with special functions, and therefore no node is essential for the operation of the network. For this reason it is called peer-to-peer architecture.

Being a decentralized model, it does not have the drawbacks of a single point of contact and a single point of failure. This architecture is characterized by its scalability, fault tolerance and high availability, since all nodes can perform any function. However, this configuration requires a more complex decision-making system, as it does not have a hierarchy.
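To illustrate that no single peer is essential, the sketch below spreads a message through a small peer-to-peer network by flooding: every peer applies the same rule and forwards a new message to its neighbours. Flooding is just one simple dissemination strategy picked for this example, and the helper names (`flood`, `without_peer`) and the five-peer ring are hypothetical.

```python
from collections import deque


def flood(peers, start):
    """Spread a message from `start` to every reachable peer.

    `peers` maps each peer to the peers it is directly connected to.
    Returns the hop count at which each peer first received the message.
    """
    received_at = {start: 0}
    pending = deque([start])
    while pending:
        peer = pending.popleft()
        for neighbour in peers[peer]:
            if neighbour not in received_at:
                # Every peer runs the same rule; none has a special role.
                received_at[neighbour] = received_at[peer] + 1
                pending.append(neighbour)
    return received_at


def without_peer(peers, failed):
    """Return a copy of the network with one peer removed."""
    return {peer: [n for n in neighbours if n != failed]
            for peer, neighbours in peers.items() if peer != failed}


if __name__ == "__main__":
    ring = {"a": ["b", "e"], "b": ["a", "c"], "c": ["b", "d"],
            "d": ["c", "e"], "e": ["d", "a"]}
    print(flood(ring, "a"))
    # The message still reaches every remaining peer after "c" fails.
    print(flood(without_peer(ring, "c"), "a"))
```

There is no coordinator to lose here, but each peer has to keep track of what it has already seen, which hints at the extra decision-making complexity mentioned above.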

Veronica is a culture reporter at Collaborative Research Group, where she writes about food, fitness, weird stuff on the internet, and, well, just about anything else. She has also covered technology news and has a penchant for smartphone stories.
