The computer is the most advanced and efficient computing machine ever invented. We explain and summarize the history of the computer, how the first ones were invented, and what their main characteristics are.

Computer History

The history of the computer is the account of the events, innovations and technological developments in the field of computing and automation that gave rise to the machines we know as computers. It also records their improvement and refinement up to the miniaturized, fast versions of the 21st century.

Computers, as we all know, are the most advanced and efficient computing machines ever invented. They have enough processing power, autonomy and speed to replace humans in many tasks, and to enable virtual and digital ways of working that were never possible before in history.

The invention of this type of device in the 20th century forever changed the way we understand industrial processes, work, society and countless other areas of our lives. It affects everything from the way we interact to the kind of global information exchange we are able to carry out.

Computer Background

The computer has a long prehistory, dating back to the first slide rules and the first machines designed to make arithmetic easier for humans. The abacus, for example, was an important early advance, created by some accounts around 4,000 BC.

There were also much later inventions, such as Blaise Pascal's mechanical calculator, known as the Pascaline, created in 1642. It consisted of a series of gears that allowed arithmetic operations to be carried out. The machine was improved by Gottfried Leibniz in 1671, and the history of calculators began.

Human attempts at automation continued from then on: in 1802 Joseph Marie Jacquard invented a system of punched cards to automate his looms, and in 1822 the Englishman Charles Babbage, who later adopted such cards for his own designs, proposed a difference engine, a machine for calculating mathematical tables automatically.

Only twelve years later (1834), he improved on his design and conceived an Analytical Engine capable of the four arithmetic operations and of storing numbers in a memory (up to 1,000 50-digit numbers). Although the machine was never completed, Babbage is considered the father of computing for this reason, since it represents a leap into the world of computing as we know it.

Invention Of The Computer

The invention of the computer cannot be attributed to one person. Babbage is considered the father of the branch of knowledge that would later become computing, but the first computer as such was not built until much later.

Another important founder in this process was Alan Turing, who described a theoretical machine capable of computing anything that can be computed, known as the "universal machine" or "Turing machine". The ideas behind it were the same ones that later gave rise to the first computers.

Another important case was that of ENIAC (Electronic Numerical Integrator and Computer), begun in 1943 at the University of Pennsylvania by John Mauchly and J. Presper Eckert and considered the grandfather of computers proper. It contained about 18,000 vacuum tubes and filled an entire room.

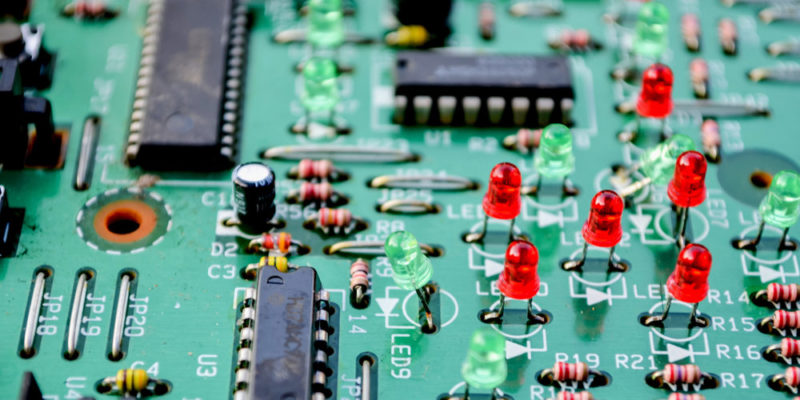

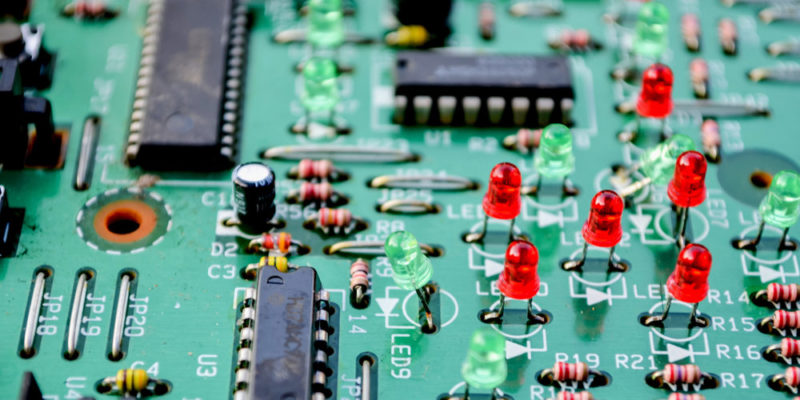

Invention Of Transistors

The history of computers would not have taken the course it did without the invention of the transistor in 1947, the result of the efforts of Bell Laboratories in the United States. These devices are electronic switches made of solid materials that need no vacuum to operate.

This discovery was fundamental for the manufacture of the first microchips, and it allowed the move from bulky vacuum-tube devices to compact solid-state electronics. The first integrated circuit (that is, chip) was demonstrated in 1958 by Jack Kilby, with Robert Noyce independently developing his own version shortly afterwards. Kilby received the Nobel Prize in Physics in 2000 for the invention.

The First Computer

The first computers emerged as logic calculation machines, driven by the needs of the Allies during World War II. To decode enemy transmissions, calculations had to be made quickly and constantly.

For this reason, in 1944 Harvard University, with the help of IBM, completed the first electromechanical computer, named the Mark I. It was about 15 meters long and 2.5 meters high, housed in a glass and stainless steel case. It had 760,000 parts, 800 kilometers of cables and 420 control switches. It remained in service for 16 years.

At the same time, in Germany, Konrad Zuse had built the Z1 and Z2, experimental models of a similar kind, and went on to complete his fully operational Z3, based on the binary system. It was smaller and cheaper to build than its American competitor.

The First Commercial-use Computer

In February 1951 the Ferranti Mark 1 appeared, the commercially available version of the Manchester Mark 1 developed at the University of Manchester (and, despite the similar name, unrelated to the Harvard Mark I). It was extremely important in the history of the computer, as it had index registers, which made it easier to read through a sequence of words in memory.

Up to thirty-four different patents arose from its development. In later years, many of its design ideas were incorporated into IBM computers, which were very successful industrially and commercially.

The First Programming Language

In 1953 a team of IBM programmers led by John Backus began developing FORTRAN, short for "The IBM Mathematical Formula Translating System". It is widely regarded as the first high-level programming language, that is, the first widely used language that let programmers write in a notation close to mathematics and have it translated into machine instructions. Its first compiler was delivered in 1957.

It was initially developed for the IBM 704 computer, and for a wide range of scientific and engineering applications, which is why it has gone through many versions over more than half a century of use. It remains widely used, especially in scientific computing on the world's supercomputers.

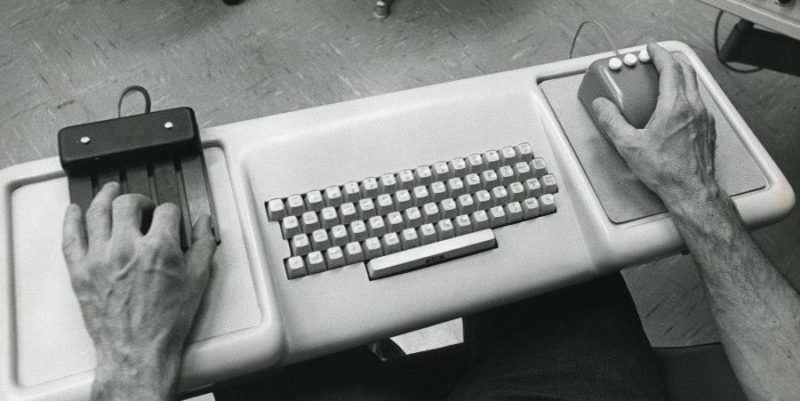

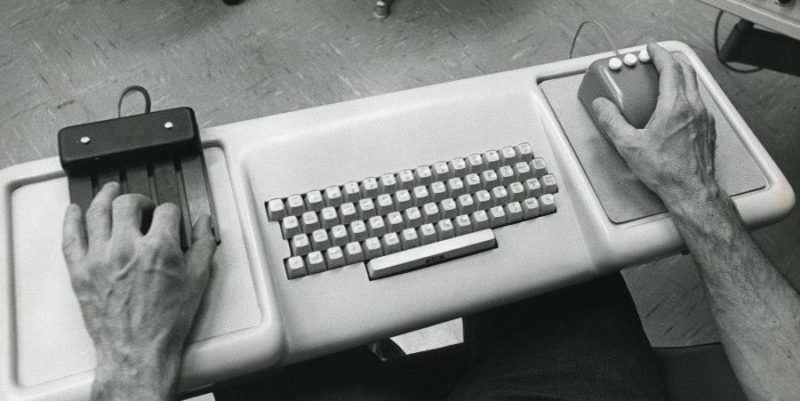

The First Modern Computer

The first modern computer appeared in the fall of 1968, as a prototype presented by Douglas Engelbart. It featured for the first time a mouse or pointer and a graphical user interface (GUI), forever changing the way users and computer systems would interact from then on.

Engelbart's presentation of the prototype lasted 90 minutes and included a live on-screen connection with his research center, making it one of the first videoconferences in history. The later Apple and then Windows systems built on the ideas of this first prototype.

Secondary Storage Devices

The first devices for exchanging information between one computer and another were floppy disks, created in 1971 by IBM. They were black squares of flexible plastic enclosing a disk of magnetizable material that made it possible to record and retrieve information. There were several types of floppy disks:

- 8 inches: The first to appear, bulky and with a capacity between 79 and 512 kbytes.

- 5 ¼ inches: Similar to the 8-inch but smaller, they stored between 89 and 360 kbytes.

- 3 ½ inches: Introduced in the 1980s, they were rigid, colored, and much smaller, with a capacity of between 720 and 1440 kbytes.

There were also high and low-density versions, and numerous cassette variants. From the late 1980s, the arrival and mass adoption of the compact disc (CD) displaced the format, increasing the speed and capacity of data retrieval.

Finally, at the turn of the century, all these formats became obsolete and were replaced by the USB flash drive (pendrive), a removable flash memory of varied but much higher capacity, high speed and extreme portability.

The First Computer Networks

The first computer network in the world was ARPANET, created in the late 1960s by the United States Department of Defense, with its first nodes connected in 1969. It served as a rapid platform for the exchange of information between educational and state institutions, probably for military purposes.

This network was developed, updated and eventually became the backbone of the early Internet, a role it held until it was decommissioned in 1990, by which time the Internet was beginning to open up to the general public.

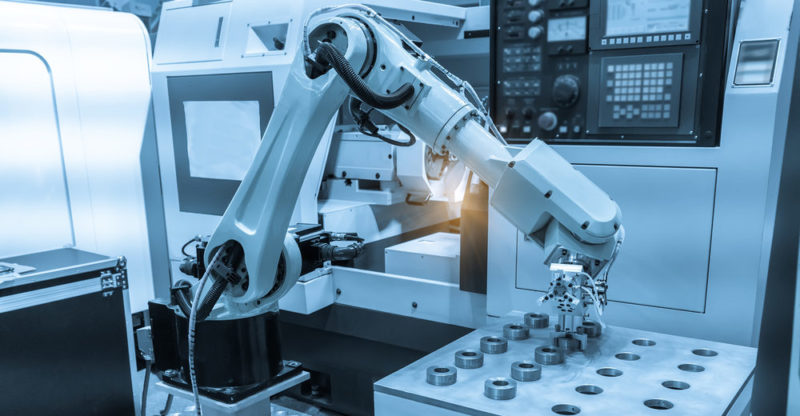

Computers Of The 21st Century

Computers

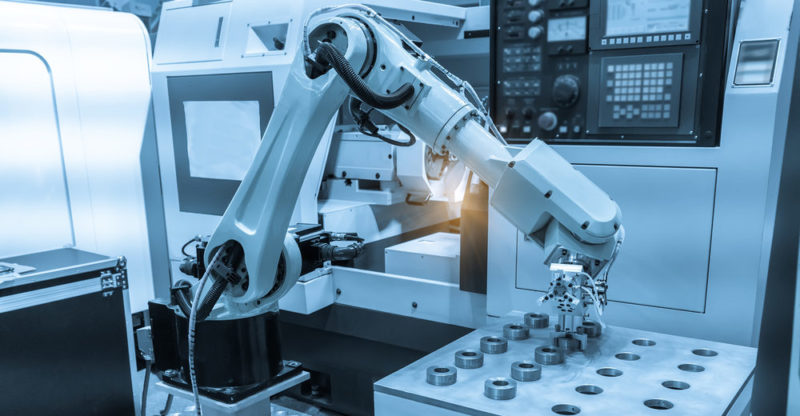

are now part of everyday life , to the point that for many a world without them is already inconceivable. They are found in our offices, on our cell phones , in various household appliances, in charge of automated installations, and performing endless operations automatically and independently.

This has many positive aspects, but it also raises many fears. For example, the rise of robotics, the natural next step for computing, threatens to put many human workers out of work, overtaken by automation that grows more capable and faster every day.

The above content, published at Collaborative Research Group, is for informational and educational purposes only and has been developed by referring to reliable sources and recommendations from technology experts. We do not have any contact with official entities, nor do we intend to replace the information that they issue.

Abubakr Conner brings a diverse skill set to our team, and covers everything from analysis to the culture of food and drink. He believes: "Education is the most powerful weapon that exists to change the world."
